# Monoloco library [Downloads](https://pepy.tech/project/monoloco)

Continuously tested on Linux, macOS and Windows: [Tests](https://github.com/vita-epfl/monoloco/actions?query=workflow%3ATests)

This library is based on three research projects for monocular/stereo 3D human localization (detection), body orientation, and social distancing. Check the __video teaser__ of the library on [__YouTube__](https://www.youtube.com/watch?v=O5zhzi8mwJ4).

---

> __MonStereo: When Monocular and Stereo Meet at the Tail of 3D Human Localization__

> _[L. Bertoni](https://scholar.google.com/citations?user=f-4YHeMAAAAJ&hl=en), [S. Kreiss](https://www.svenkreiss.com), [T. Mordan](https://people.epfl.ch/taylor.mordan/?lang=en), [A. Alahi](https://scholar.google.com/citations?user=UIhXQ64AAAAJ&hl=en)_, ICRA 2021

__[Article](https://arxiv.org/abs/2008.10913)__ __[Citation](#Citation)__ __[Video](https://www.youtube.com/watch?v=pGssROjckHU)__

---

> __Perceiving Humans: from Monocular 3D Localization to Social Distancing__

> _[L. Bertoni](https://scholar.google.com/citations?user=f-4YHeMAAAAJ&hl=en), [S. Kreiss](https://www.svenkreiss.com), [A. Alahi](https://scholar.google.com/citations?user=UIhXQ64AAAAJ&hl=en)_, T-ITS 2021

__[Article](https://arxiv.org/abs/2009.00984)__ __[Citation](#Citation)__ __[Video](https://www.youtube.com/watch?v=r32UxHFAJ2M)__

---

> __MonoLoco: Monocular 3D Pedestrian Localization and Uncertainty Estimation__

> _[L. Bertoni](https://scholar.google.com/citations?user=f-4YHeMAAAAJ&hl=en), [S. Kreiss](https://www.svenkreiss.com), [A. Alahi](https://scholar.google.com/citations?user=UIhXQ64AAAAJ&hl=en)_, ICCV 2019

__[Article](https://arxiv.org/abs/1906.06059)__ __[Citation](#Citation)__ __[Video](https://www.youtube.com/watch?v=ii0fqerQrec)__

## Library Overview

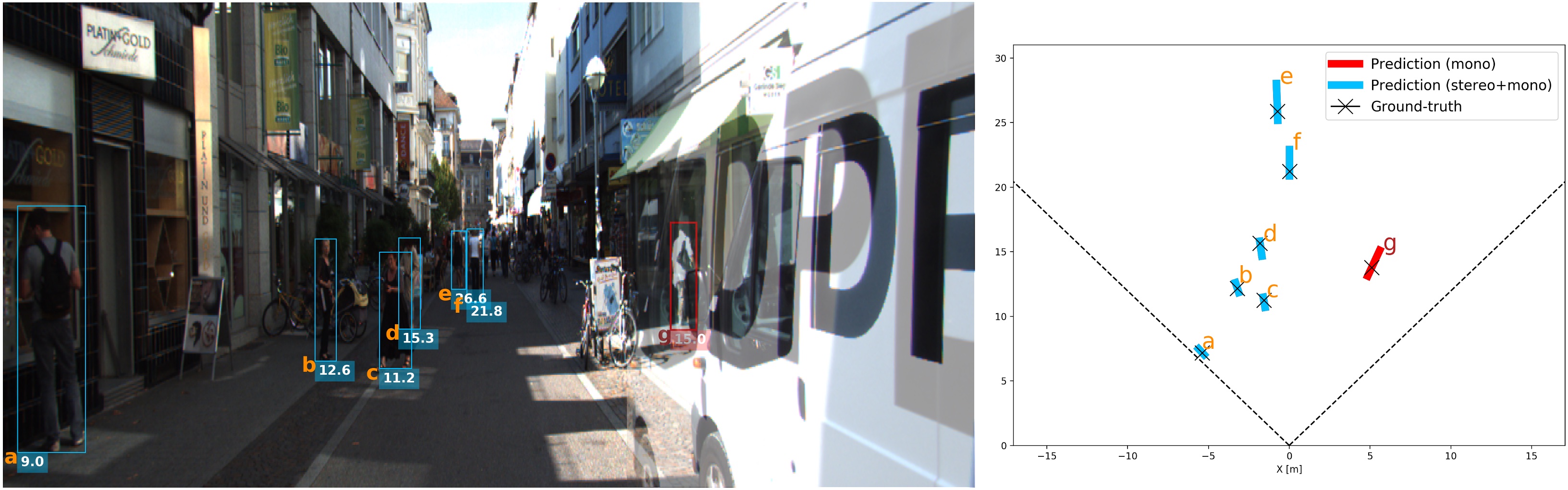

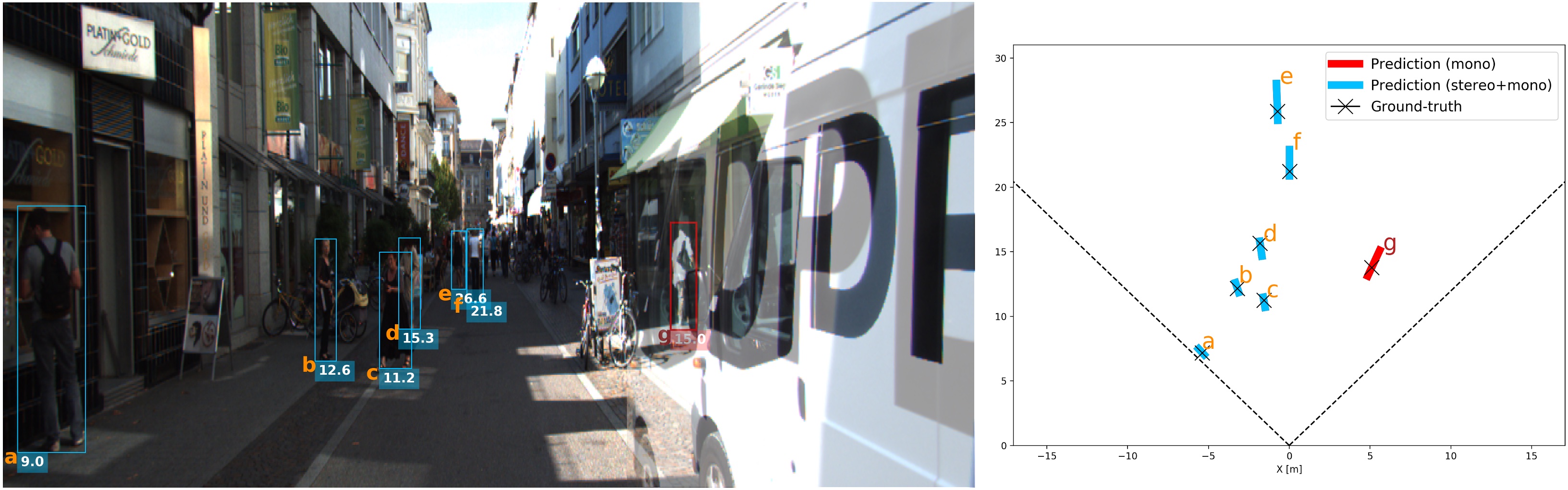

Visual illustration of the library components:

## License

All projects are built upon [OpenPifPaf](https://github.com/vita-epfl/openpifpaf) for the 2D keypoints and share the AGPL license.

This software is also available for commercial licensing via the EPFL Technology Transfer Office (https://tto.epfl.ch/, info.tto@epfl.ch).

## Quick setup

A GPU is not required, but it is highly recommended for real-time performance.

The installation has been tested on macOS and Linux, with Python 3.6, 3.7 and 3.8.

Packages have been installed with pip and virtual environments.

For a quick installation, do not clone this repository. Make sure there is no folder named `monoloco` in your current directory (Python could import it instead of the installed package), and run:

```sh
pip3 install monoloco
pip3 install matplotlib
```
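If a folder named `monoloco` sits in the working directory, `python3 -m monoloco.run` may import it instead of the pip-installed package, because `-m` puts the current directory on the import path. The snippet below is a minimal sketch using only the standard library; the helper names `local_shadow` and `installed_location` are hypothetical and not part of the library.

```python
import importlib.util
import os


def local_shadow(package: str = "monoloco", cwd: str = ".") -> bool:
    """Return True if a folder named `package` exists in `cwd` and could shadow the installed package."""
    return os.path.isdir(os.path.join(cwd, package))


def installed_location(package: str = "monoloco"):
    """Return the file Python would import `package` from, or None if it cannot be found."""
    spec = importlib.util.find_spec(package)
    return spec.origin if spec is not None else None


# Example check before running the library from the current directory.
if local_shadow():
    print("Warning: ./monoloco exists and may be imported instead of the installed package")
print("monoloco would be imported from:", installed_location())
```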

For development of the source code itself, clone this repository and then run:

```sh
pip3 install wheel  # provides the bdist_wheel command used below
cd monoloco
python3 setup.py sdist bdist_wheel
pip3 install -e .
```

### Interfaces

All the commands are run through a main file called `run.py` using subparsers.

To check all the options:

* `python3 -m monoloco.run --help`

* `python3 -m monoloco.run predict --help`

* `python3 -m monoloco.run train --help`

* `python3 -m monoloco.run eval --help`

* `python3 -m monoloco.run prep --help`

or check the file `monoloco/run.py`.
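The subparser layout can be illustrated with Python's standard `argparse` module. This is a minimal sketch of the pattern, not the actual contents of `monoloco/run.py`: only the four subcommand names and the `--mode` choices come from this README, and the positional `images` argument and help strings are assumptions.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Sketch of a CLI with one subcommand per task, each with its own --help."""
    parser = argparse.ArgumentParser(prog="monoloco.run")
    subparsers = parser.add_subparsers(dest="command")
    subparsers.required = True  # works on Python 3.6, unlike the required= keyword

    # The predict subcommand takes input images and the network to run.
    predict = subparsers.add_parser("predict", help="run inference on images")
    predict.add_argument("images", nargs="+", help="image paths")
    predict.add_argument("--mode", choices=["mono", "stereo", "keypoints"], default="mono")

    # The remaining subcommands are left empty in this sketch.
    subparsers.add_parser("train", help="train a model")
    subparsers.add_parser("eval", help="evaluate a model")
    subparsers.add_parser("prep", help="prepare datasets")
    return parser


# Equivalent of `python3 -m monoloco.run predict docs/002282.png --mode mono`
args = build_parser().parse_args(["predict", "docs/002282.png", "--mode", "mono"])
print(args.command, args.images, args.mode)  # predict ['docs/002282.png'] mono
```

Each subcommand owns its arguments, which is why `predict --help` and `train --help` list different options.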

# Predictions

The software receives an image (or an entire folder using glob expressions), calls OpenPifPaf for 2D human pose detection over the image, and runs Monoloco++ or MonStereo for 3D localization, social distancing and/or orientation.
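With a POSIX shell, an unquoted pattern such as `docs/*.png` is usually expanded by the shell before the program sees it, while a quoted pattern reaches the program unchanged. The sketch below shows how such a pattern can be turned into a sorted list of image files with Python's `glob` module; `collect_images` and its extension filter are hypothetical and do not describe how monoloco itself handles its inputs.

```python
import glob
import os


def collect_images(pattern: str, extensions=(".png", ".jpg", ".jpeg")) -> list:
    """Expand a path or glob expression into a sorted list of image files."""
    paths = glob.glob(pattern)
    # Keep only regular files whose extension matches (case-insensitive).
    return sorted(p for p in paths
                  if os.path.isfile(p) and os.path.splitext(p)[1].lower() in extensions)


print(collect_images("docs/*.png"))
```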

**Which Modality**


The argument `--mode` defines which network to run:

- select `--mode mono` (default) to predict the 3D localization of all the humans from monocular image(s)

- select `--mode stereo` for stereo images

- select `--mode keypoints` if you are only interested in the 2D keypoints from OpenPifPaf

Models are downloaded automatically. To use a specific model, use the argument `--model`. Additional models can be downloaded from [here](https://drive.google.com/drive/folders/1jZToVMBEZQMdLB5BAIq2CdCLP5kzNo9t?usp=sharing).

**Which Visualization**

- select `--output_types multi` to visualize both the frontal view and the bird's-eye view in the same picture

- select `--output_types bird front` to get separate pictures for the two views, or list only one of them for a single view

- select `--output_types json` to save the output as a JSON file

If you select `--mode keypoints`, use the standard OpenPifPaf arguments.
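When scripting several runs, it can help to assemble the `predict` command programmatically. The helper below is a hypothetical sketch: it only uses the `--mode`, `--output_types`, `--path_gt` and `--focal` arguments named in this README, and it does not cover the OpenPifPaf arguments used with `--mode keypoints`.

```python
import shlex


def build_predict_cmd(images, mode="mono", output_types=("multi",), path_gt=None, focal=None):
    """Assemble a `monoloco.run predict` command line as a list of arguments."""
    if mode not in ("mono", "stereo", "keypoints"):
        raise ValueError(f"unknown mode: {mode}")
    cmd = ["python3", "-m", "monoloco.run", "predict", *images, "--mode", mode]
    # --output_types takes one or more values, e.g. `bird front`.
    cmd += ["--output_types", *output_types]
    if path_gt is not None:
        cmd += ["--path_gt", path_gt]
    if focal is not None:
        cmd += ["--focal", str(focal)]
    return cmd


cmd = build_predict_cmd(["docs/002282.png"], output_types=("bird", "front"), focal=5.7)
print(" ".join(shlex.quote(arg) for arg in cmd))
# python3 -m monoloco.run predict docs/002282.png --mode mono --output_types bird front --focal 5.7
```

The returned list can be passed directly to `subprocess.run(cmd, check=True)`, which avoids shell quoting issues with unusual file names.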

**Focal Length and Camera Parameters**

Absolute distances are affected by the camera intrinsic parameters.

When processing KITTI images, the network uses the intrinsic matrix provided with the dataset.

In all other cases, we use the parameters of the nuScenes cameras, which have 1/1.8" CMOS sensors of size 7.2 x 5.4 mm.

The default focal length is 5.7 mm, and it can be modified with the argument `--focal`.
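To see why the focal length matters, consider a pinhole camera: the focal length in pixels equals the focal length in millimetres times the image width in pixels divided by the sensor width in millimetres, and a person of known height `H` spanning `h` pixels lies at distance `Z = f * H / h`. The sketch below assumes square pixels, a principal point at the image centre and a sensor covering the full image width; the 1600-pixel width and the 1.75 m height are illustrative values only, and this is not a description of how the network uses the focal length internally.

```python
def focal_mm_to_px(focal_mm: float, sensor_width_mm: float, image_width_px: int) -> float:
    """Convert a focal length from millimetres to pixels (pinhole model, square pixels)."""
    return focal_mm * image_width_px / sensor_width_mm


def intrinsic_matrix(focal_px: float, image_width_px: int, image_height_px: int):
    """3x3 camera matrix, assuming the principal point lies at the image centre."""
    return [[focal_px, 0.0, image_width_px / 2],
            [0.0, focal_px, image_height_px / 2],
            [0.0, 0.0, 1.0]]


def depth_from_height(focal_px: float, height_m: float, height_px: float) -> float:
    """Pinhole relation Z = f * H / h: the distance scales linearly with the focal length."""
    return focal_px * height_m / height_px


f_px = focal_mm_to_px(5.7, 7.2, 1600)  # 5.7 mm lens, 7.2 mm wide sensor, 1600 px wide image
print(round(f_px, 2))  # 1266.67
print(round(depth_from_height(f_px, 1.75, 100), 2))  # 22.17
```

Because `Z` is proportional to `f`, a focal length that is off by 10% shifts every estimated distance by 10%, which is why `--focal` should match the camera that took the images.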

## A) 3D Localization

**Ground-truth comparison**

If you provide a ground-truth JSON file to compare against the network predictions, the script matches every detection to the ground truth using the Intersection over Union (IoU) metric.

The ground-truth file can be generated with the subparser `prep`, or downloaded directly from [Google Drive](https://drive.google.com/file/d/1e-wXTO460ip_Je2NdXojxrOrJ-Oirlgh/view?usp=sharing), and passed with the argument `--path_gt`.
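This README does not detail the matching procedure beyond the use of IoU. As a minimal sketch under stated assumptions (boxes given as `(x1, y1, x2, y2)`, greedy one-to-one matching in order of decreasing IoU, and a hypothetical threshold of 0.3), detections could be paired with ground-truth boxes as follows:

```python
def iou(box_a, box_b) -> float:
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def match_detections(dets, gts, threshold=0.3):
    """Greedily pair detections and ground-truth boxes in order of decreasing IoU."""
    pairs = sorted(((iou(d, g), i, j) for i, d in enumerate(dets) for j, g in enumerate(gts)),
                   reverse=True)
    used_dets, used_gts, matches = set(), set(), []
    for score, i, j in pairs:
        if score < threshold:
            break  # all remaining pairs overlap too little
        if i not in used_dets and j not in used_gts:
            used_dets.add(i)
            used_gts.add(j)
            matches.append((i, j, score))
    return matches


dets = [(0, 0, 10, 20), (50, 50, 60, 70)]
gts = [(1, 1, 11, 21)]
print(match_detections(dets, gts))  # detection 0 is paired with ground truth 0; detection 1 stays unmatched
```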

**Monocular examples**

For an example image, run the following command:

```sh
python3 -m monoloco.run predict docs/002282.png \
--path_gt names-kitti-200615-1022.json \
-o
```